Unvibe quickly generates many alternative implementations for functions and classes you annotate with @ai, and re-runs your unit-tests until it finds a correct implementation.

This approach has been demonstrated, in research and in practice, to produce much better results than code generation alone. For more details, read the related article: Unvibe: Generate code that passes Unit-tests.

It's particularly effective on large projects with decent test coverage.

It works with most AI providers: local Ollama, OpenAI, DeepSeek, Claude, and Gemini.

Just add unvibe as a dependency to your project:

pip install unvibe

First define a new function in your existing Python project, then annotate it with @ai.

As an example, let's implement a Lisp interpreter in Python with Unvibe. We start by creating lisp.py:

from unvibe import ai

@ai
def lisp(expr: str) -> bool:
    """A simple lisp interpreter compatible with Python lists and functions"""
    pass

Now, let's write a few unit-tests to define how the function should behave.

In test_lisp.py:

import unvibe
from lisp import lisp

# You can also inherit unittest.TestCase, but unvibe.TestCase provides a better reward function
class LispInterpreterTestClass(unvibe.TestCase):
    def test_calculator(self):
        self.assertEqual(lisp("(+ 1 2)"), 3)
        self.assertEqual(lisp("(* 2 3)"), 6)

    def test_nested(self):
        self.assertEqual(lisp("(* 2 (+ 1 2))"), 6)
        self.assertEqual(lisp("(* (+ 1 2) (+ 3 4))"), 21)

    def test_list(self):
        self.assertEqual(lisp("(list 1 2 3)"), [1, 2, 3])

    def test_call_python_functions(self):
        self.assertEqual(lisp("(list (range 3))"), [0, 1, 2])
        self.assertEqual(lisp("(sum (list 1 2 3))"), 6)

Now, we can use Unvibe to search for a valid implementation that passes all the tests:

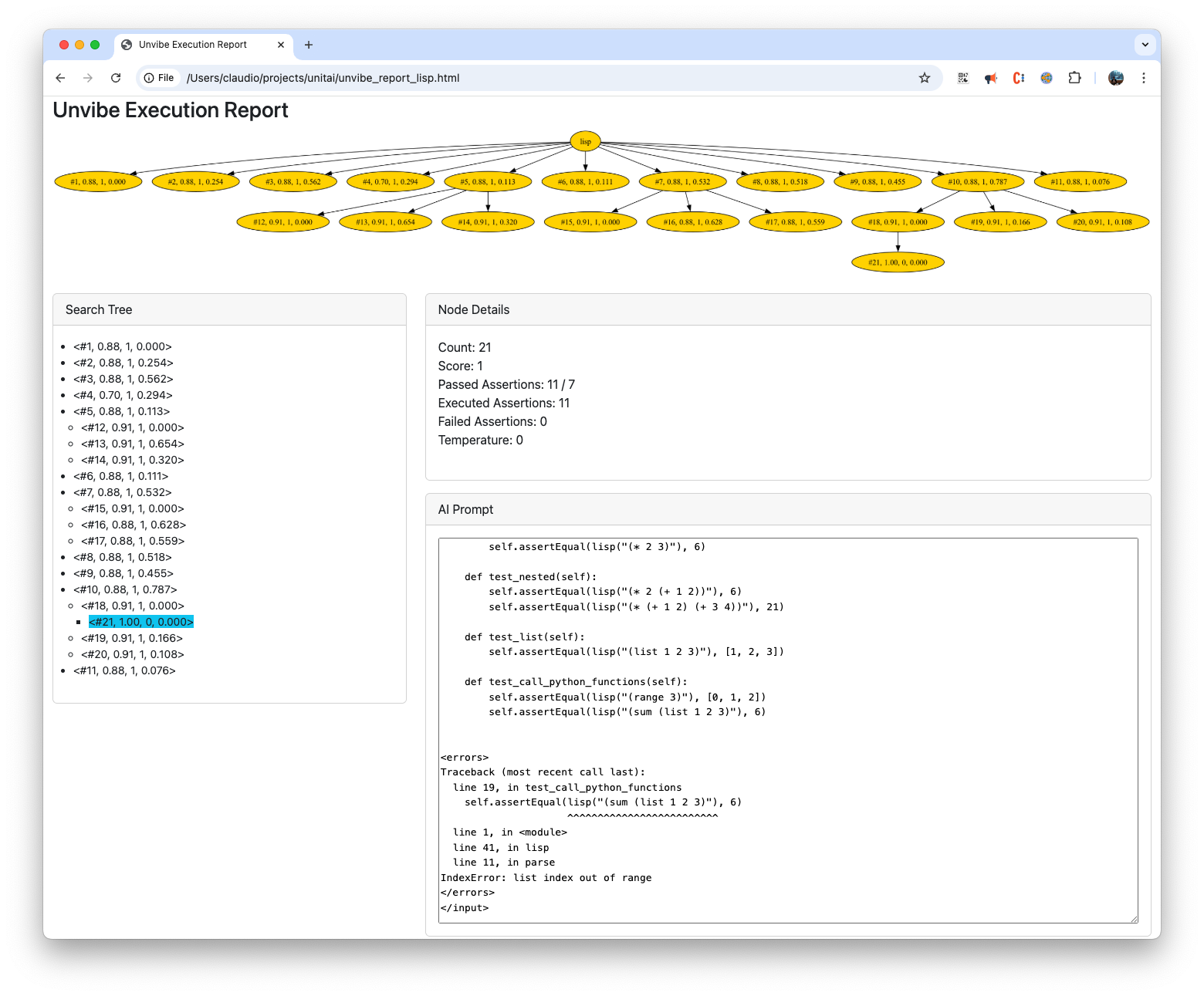

$ python -m unvibe lisp.py test_lisp.py
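The comment in the test file says that unvibe.TestCase provides a better reward function than unittest.TestCase. One plausible reading, and it is only an assumption about the idea rather than Unvibe's actual code, is that counting individual assertions gives a finer-grained score than counting whole test methods (plain unittest stops a test method at its first failed assertion). The function names and the pass/fail data below are hypothetical and exist only for illustration:

```python
# Hypothetical illustration: none of these names are part of Unvibe's API.

def score_by_tests(results: dict[str, list[bool]]) -> float:
    """Fraction of test methods in which every assertion passed."""
    passed = sum(1 for outcomes in results.values() if all(outcomes))
    return passed / len(results)


def score_by_assertions(results: dict[str, list[bool]]) -> float:
    """Fraction of individual assertions that passed."""
    outcomes = [ok for test in results.values() for ok in test]
    return sum(outcomes) / len(outcomes)


# Made-up results for a candidate that handles "+" and "list" but nothing else.
# Each list holds one pass/fail flag per assertion in that test method.
candidate = {
    "test_calculator": [True, False],
    "test_nested": [False, False],
    "test_list": [True],
    "test_call_python_functions": [False, False],
}

print(score_by_tests(candidate))       # 1 of 4 test methods fully passes
print(score_by_assertions(candidate))  # 2 of 7 assertions pass
```

With the per-test score, a candidate that fixes "*" in test_calculator but still fails test_nested would look no better than one that fixes nothing in test_nested; the per-assertion score would register the partial progress.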

The library will re-run the tests and generate many alternatives, and keep exploring the ones that pass more tests, while feeding back the test errors to the LLM. In the end you will find a new file called unvibe_lisp.py with a valid implementation:

# Unvibe Execution output.
# This implementation passed all tests
# Score: 1.0
# Passed assertions: 7/7
def lisp(exp):
    def tokenize(exp):
        return exp.replace('(', ' ( ').replace(')', ' ) ').split()

    def parse(tokens):
        if len(tokens) == 0:
            raise SyntaxError('Unexpected EOF')
        token = tokens.pop(0)
        if token == '(':
            L = []
            while tokens[0] != ')':
                L.append(parse(tokens))
            tokens.pop(0)  # Remove ')'
            return L
        elif token == ')':
            raise SyntaxError('Unexpected )')
        else:
            try:
                return int(token)
            except ValueError:
                return token

    def evaluate(x):
        if isinstance(x, list):
            op = x[0]
            args = x[1:]
            if op == '+':
                return sum(evaluate(arg) for arg in args)
            elif op == '*':
                result = 1
                for arg in args:
                    result *= evaluate(arg)
                return result
            elif op == 'list':
                return [evaluate(arg) for arg in args]
            else:
                # Call Python functions
                return globals()[op](*[evaluate(arg) for arg in args])
        return x

    tokens = tokenize(exp)
    return evaluate(parse(tokens))

$ pip install unvibe

Create a .unvibe.toml config file in your project folder.

# Keep exactly one [ai] table: TOML does not allow the same table to be
# defined twice, so the alternatives below are commented out.

# For example, to use Claude:
[ai]
provider = "claude"
api_key = "sk-..."
model = "claude-3-5-haiku-latest"
max_tokens = 5000

# Or, to use a local Ollama:
# [ai]
# provider = "ollama"
# model = "qwen2.5-coder:7b"
# host = "http://localhost:11434"

# To use the OpenAI or DeepSeek API:
# [ai]
# provider = "openai"
# base_url = "https://api.deepseek.com"
# api_key = "sk-..."
# temperature = 0.0
# max_tokens = 1024

# To use the Gemini API:
# [ai]
# provider = "gemini"
# api_key = "..."
# model = "gemini-2.0-flash"

# Advanced parameters to tune the search:
[search]
initial_spread = 10  # How many random tries to make at depth=0.
random_spread = 2    # How many random tries to make before selecting the best move.
max_depth = 30       # Maximum depth of the search tree.
max_temperature = 1  # Tries random temperatures, up to this value.
                     # Some models perform better at lower temps. In general,
                     # higher temperature = more exploration.
max_minutes = 60     # Stop after 60 minutes of search.
cache = true         # Caches AI responses to a local file to speed up re-runs and
                     # save money.

By default, Unvibe runs the tests on your local machine. This is very risky, because you're running code generated by an LLM: it may try anything. In practice, most of the projects I work on run on Docker, so I let Unvibe run wild inside a Docker container, where it can't do any harm. This is the recommended way to run Unvibe. Another practical solution is to create a new user with limited permissions on your machine, and run Unvibe as that user.
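To make the [search] parameters more concrete, here is a minimal sketch of a best-first search loop of the kind the README describes: several random attempts at depth 0, then repeated expansion of the best candidate while feeding its failures back to the generator. This is not Unvibe's implementation. The "tests" are a toy digit-matching check, fake_llm is a stub standing in for a real model, and TARGET, run_tests, fake_llm, and search are hypothetical names; only initial_spread, random_spread, and max_depth are borrowed from the config. Temperature sampling, the time limit, and caching are left out.

```python
import random

# Toy stand-in for a test suite: a candidate is a list of digits, and each
# position that matches TARGET counts as one passed assertion.
TARGET = [3, 1, 4, 1, 5]


def run_tests(candidate: list[int]) -> tuple[float, list[int]]:
    """Return (score, failing positions); the positions act as test errors."""
    failing = [i for i, (got, want) in enumerate(zip(candidate, TARGET)) if got != want]
    return 1 - len(failing) / len(TARGET), failing


def fake_llm(parent: list[int], failing: list[int], rng: random.Random) -> list[int]:
    """Stub generator: repairs one reported failure, sometimes breaks another spot."""
    child = list(parent)
    if failing:
        fixed = rng.choice(failing)
        child[fixed] = TARGET[fixed]
    if rng.random() < 0.3:
        child[rng.randrange(len(child))] = -1  # simulated regression
    return child


def search(initial_spread=10, random_spread=2, max_depth=30, seed=0):
    rng = random.Random(seed)
    # Depth 0: several independent random attempts, keep the best one.
    candidates = [[rng.randrange(10) for _ in TARGET] for _ in range(initial_spread)]
    best = max(candidates, key=lambda c: run_tests(c)[0])
    for _ in range(max_depth):
        score, failing = run_tests(best)
        if score == 1.0:
            break  # every check passes
        # Expand the current best with a few attempts that see its failures.
        children = [fake_llm(best, failing, rng) for _ in range(random_spread)]
        # Keep the parent in the pool so the best score never goes down.
        best = max(children + [best], key=lambda c: run_tests(c)[0])
    return best, run_tests(best)[0]


best, score = search()
print(best, score)
```

Keeping the parent in the pool is one simple design choice for this sketch: a child that regresses is discarded instead of becoming the new starting point.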

Similar approaches have been explored in various research papers from DeepMind and Microsoft Research:

- FunSearch: Mathematical discoveries from program search with large language models (Nature)
- Gold-medalist Performance in Solving Olympiad Geometry with AlphaGeometry2 (arXiv)
- AI achieves silver-medal standard solving International Mathematical Olympiad problems (DeepMind)
- LLM-based Test-driven Interactive Code Generation: User Study and Empirical Evaluation (arXiv)

For more information, check the original article: Unvibe: Generate code that passes unit-tests